At Anchor, we help developers deploy internal TLS by building and managing private (aka internal) Certificate Authorities (CAs). A private CA is a lot like a public CA, but just for your organization. Join our private beta and get early access to Anchor.

The most important innovations in infrastructure aren't happening inside the VPC; they're happening at the edge. WebAssembly (Wasm) and WebAssembly System Interface (WASI) let you run your code in a VM that boots quickly and performs well. Combined with a CDN's global presence and scale, you have a compute platform that runs parts of your SaaS workload right next to your customers’ infrastructure.

At Anchor, we’re using Fastly Compute to run a CA-aware caching layer deployed to the edge. This post is about how our caching edge layer lets us serve packages from Fastly’s object store, KV Store, while keeping authentication in our frontend SaaS application. Before we dive into that, a quick primer on Wasm and WASI.

Wasm and WASI

Wasm started as a browser technology to run compiled code in a secure sandbox. Per the spec, it’s “a safe, portable, low-level code format designed for efficient execution and compact representation.” It’s like a specialized instruction set architecture (ISA) designed for a browser-tab-sized machine, paired with an application binary interface (ABI) defined by the browser’s JavaScript APIs.

WASI unshackles Wasm from the browser by defining runtime interfaces that can be implemented anywhere. The runtime interface acts like the operating system’s syscall interface to the Wasm program. Instead of a full-blown general purpose environment like POSIX, runtimes can be stripped down for special purpose workloads. Fastly’s Compute runtime is an interface specialized for processing web requests.

On Fastly Compute, a Wasm program starts with a web request ready to process and a set of APIs for receiving, sending, and proxying requests. It also has APIs for interacting with Fastly services like KV Store and Config Store. Together, these APIs let us inject code into the request/response lifecycle of every inbound request to anchor.dev.

Use Case and Architecture

We're building a custom caching layer using Fastly Compute. This layer has smart caching of CA assets, and it’s made possible by reusing the same codebase as our backend API for CA operations.

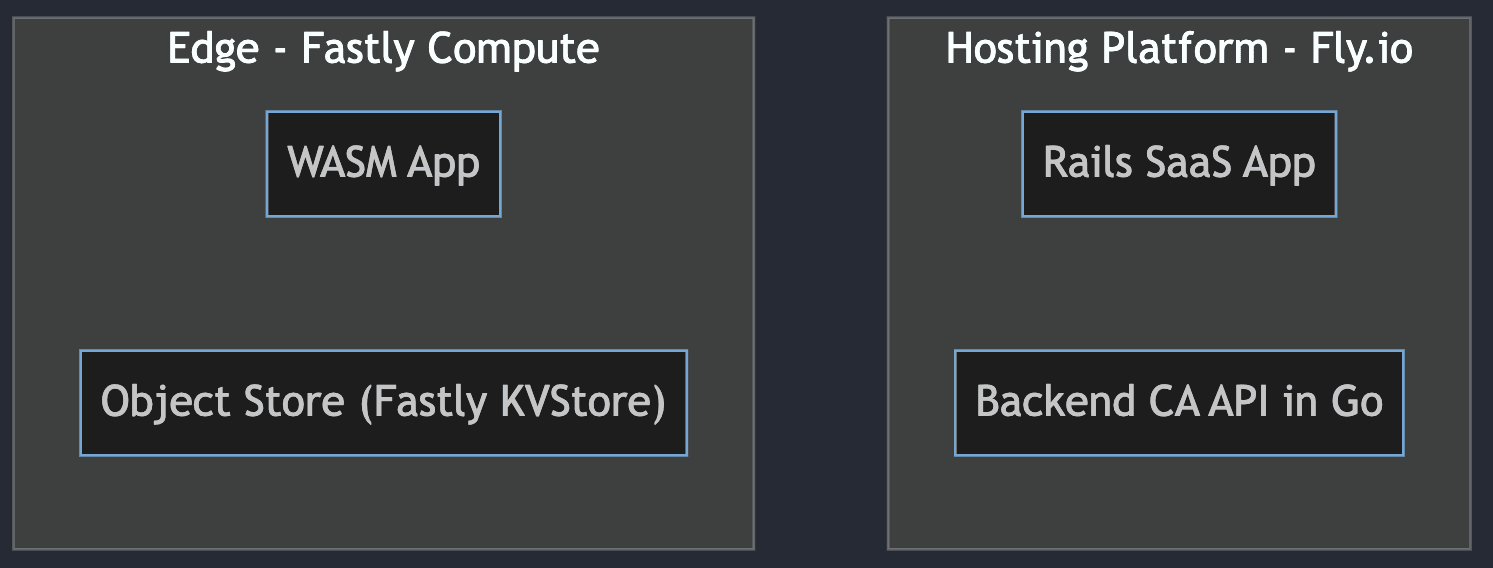

We use a services architecture split between our hosting platform and edge:

The CA API, written in Go. This is our backend, and it generates and signs artifacts like certificates, packages, etc.

A Rails frontend SaaS application that manages the business logic, permissions, and authentication/authorization.

Our Fastly edge Wasm component. It implements smart caching of packages and assets.

Generating and Serving Packages

Package generation is core to how Anchor works: we build packages to install and update CA certificates in system and application trust stores. For more on why we do this, check out our post on the Distribution Problem.

Serving packages must be deterministic. Package managers track the content digest of these packages, so we need to make sure we always serve identical package data; serving two slightly different variants of the same package version would cause all kinds of havoc for users.
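To make the determinism requirement concrete, here is a minimal sketch of a byte-for-byte reproducible archive build. It is an illustration only: the post does not describe the package format, so the tar layout, the `buildArchive` and `digest` names, the file names, and the choice of SHA-256 are all assumptions.

```go
package main

import (
	"archive/tar"
	"bytes"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
	"time"
)

// buildArchive (hypothetical) writes files into a tar archive in a fixed
// order with fixed metadata, so the same input always yields the same bytes.
func buildArchive(files map[string][]byte) ([]byte, error) {
	names := make([]string, 0, len(files))
	for name := range files {
		names = append(names, name)
	}
	sort.Strings(names) // map iteration order is random; sort for stability

	var buf bytes.Buffer
	tw := tar.NewWriter(&buf)
	for _, name := range names {
		hdr := &tar.Header{
			Typeflag: tar.TypeReg,
			Name:     name,
			Mode:     0o644,
			Size:     int64(len(files[name])),
			ModTime:  time.Unix(0, 0), // pinned: wall-clock time would change the bytes
		}
		if err := tw.WriteHeader(hdr); err != nil {
			return nil, err
		}
		if _, err := tw.Write(files[name]); err != nil {
			return nil, err
		}
	}
	if err := tw.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// digest returns the hex-encoded SHA-256 of b (the hash choice is an assumption).
func digest(b []byte) string {
	sum := sha256.Sum256(b)
	return hex.EncodeToString(sum[:])
}

func main() {
	files := map[string][]byte{
		"ca-root.pem":         []byte("root certificate"),
		"ca-intermediate.pem": []byte("intermediate certificate"),
	}
	first, err := buildArchive(files)
	if err != nil {
		panic(err)
	}
	second, err := buildArchive(files)
	if err != nil {
		panic(err)
	}
	// Two builds of the same input should hash identically.
	fmt.Println(digest(first) == digest(second))
}
```

Anything that varies between builds (timestamps, iteration order, compression settings) would change the digest a package manager has already recorded, which is why each of those inputs is pinned here.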

Our Initial Version

Uploading and serving artifacts directly from an object store is a common way to ensure byte-for-byte fidelity. It removes the need for long-term package reproducibility since the data for older package versions is always available.

We knew we’d eventually upload and serve packages from an object store, but that requires some coordination to track the state of package builds and uploads. Since our initial version could reliably rebuild packages quickly, we punted on serving packages from the object store.

So at first, package bytes were never stored. When the package was created, we’d generate its contents only to calculate its digest, which we’d store in the database. When we served the package, we’d regenerate it on the fly, and compare its digest to the digest in the database.
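The create-then-verify flow described above can be sketched in a few lines of Go. This is a hedged illustration rather than our production code: `contentDigest`, `servePackage`, and the `build` callback are hypothetical names, and SHA-256 is assumed because the post does not name the hash function.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"errors"
	"fmt"
)

// errDigestMismatch signals that regenerated bytes differ from what was
// recorded when the package was created.
var errDigestMismatch = errors.New("regenerated package does not match stored digest")

// contentDigest returns the hex-encoded SHA-256 of the package bytes.
func contentDigest(pkg []byte) string {
	sum := sha256.Sum256(pkg)
	return hex.EncodeToString(sum[:])
}

// servePackage regenerates the package with build and only returns the bytes
// if they hash to the digest stored at creation time.
func servePackage(build func() []byte, storedDigest string) ([]byte, error) {
	pkg := build()
	if contentDigest(pkg) != storedDigest {
		return nil, errDigestMismatch
	}
	return pkg, nil
}

func main() {
	build := func() []byte { return []byte("ca-bundle v1") }

	// Creation: generate once, keep only the digest.
	stored := contentDigest(build())

	// Download: regenerate and verify before serving.
	pkg, err := servePackage(build, stored)
	fmt.Println(len(pkg), err)

	// A changed generator is caught instead of being served.
	changed := func() []byte { return []byte("ca-bundle v1 (new layout)") }
	_, err = servePackage(changed, stored)
	fmt.Println(err)
}
```

The second call shows the cost of this design: any change to the generator that alters the output of an existing package turns into a failed download.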

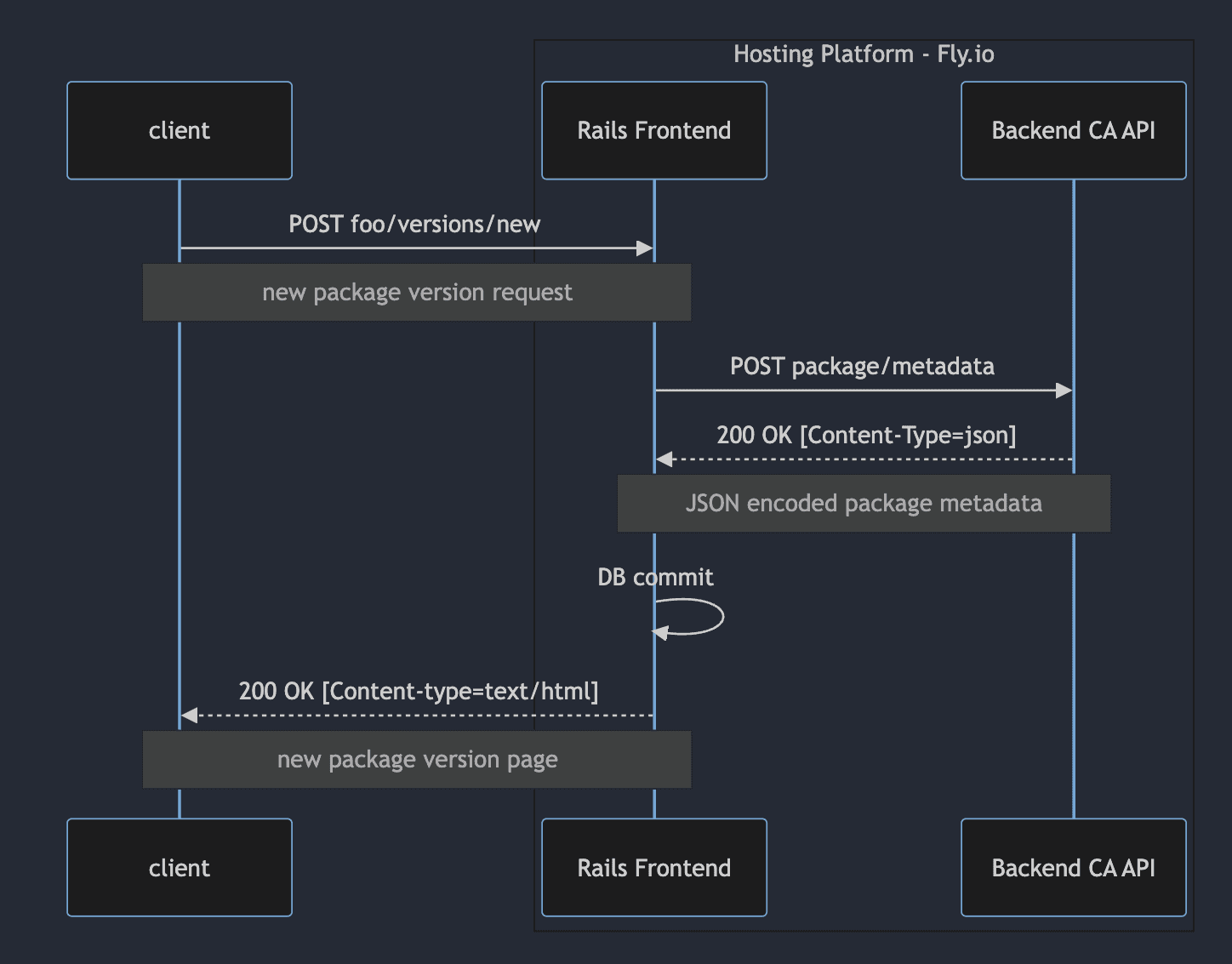

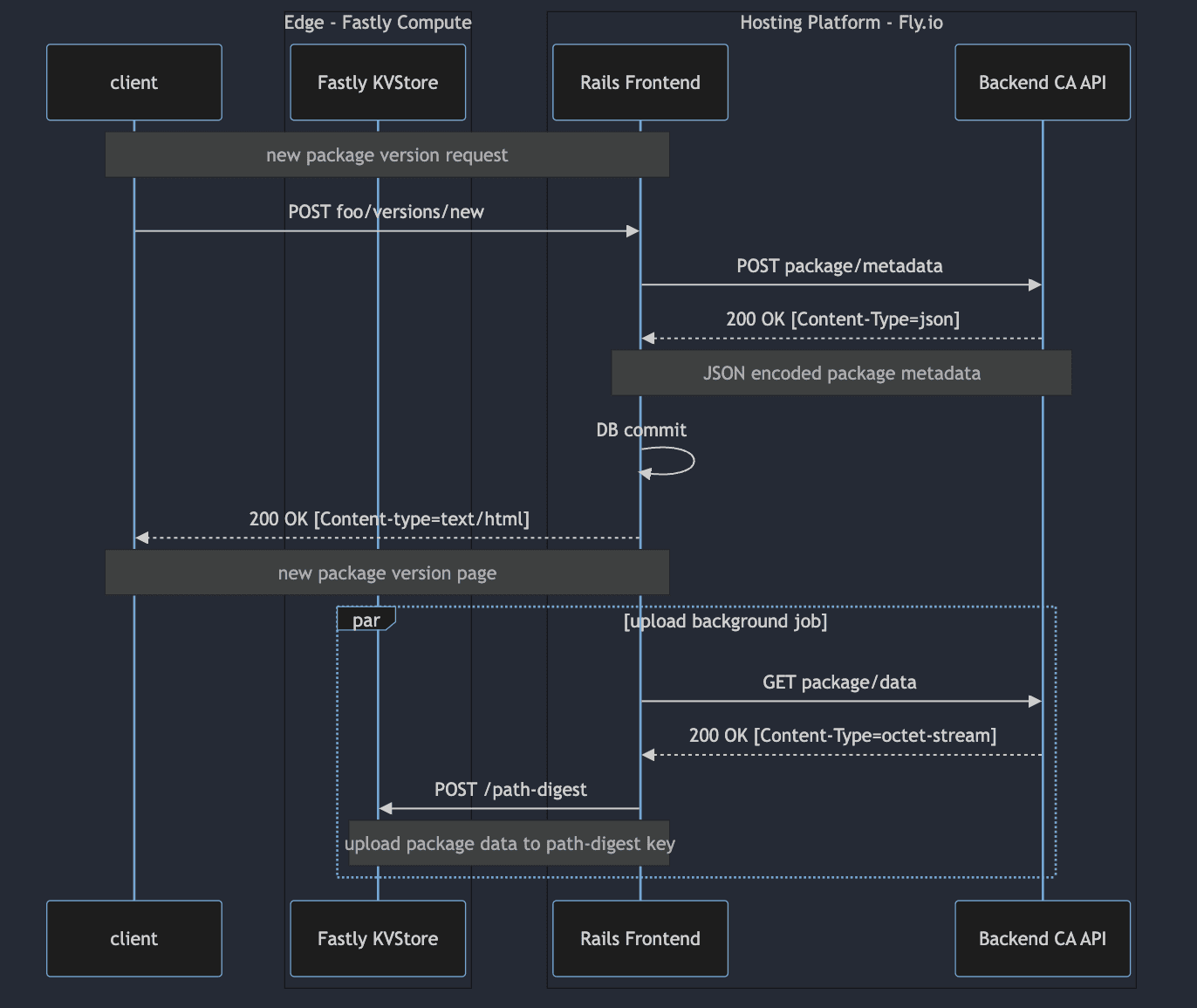

Package Creation

When a user created a new package, the Rails app would assemble the new package record and its associations, and send them as a package metadata request payload to the backend CA API. The API responded with the metadata of the new package, including the content digest, but not the package data itself. The Rails app then committed the package record to the database and served a page back to the user with a download link.

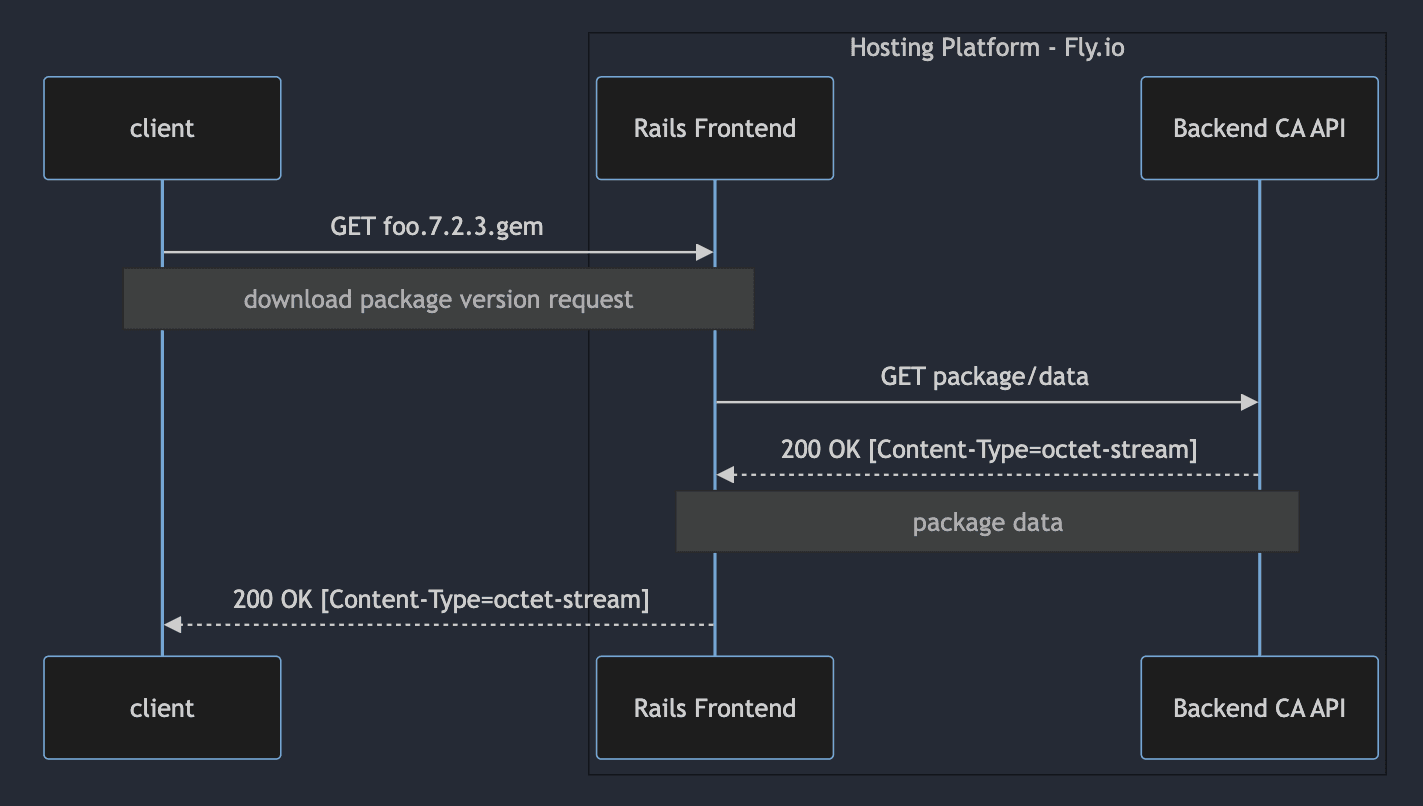

Package Downloads

Package download requests were handled by the Rails app. After another round of authorization, the package record was fetched from the database and sent as a JSON payload in a package request to the backend CA API. But this time the backend request was for the package data. The CA API would regenerate the package (only returning it if the content digest matched), and the Rails app would stream that response downstream.

Upsides, Downsides

This was an MVP that let us generate and serve packages, and the digests ensured we didn’t serve bad data. But it ossified the package generation code, since even small changes could alter the contents of existing packages.

Also, the package data was streamed through our frontend Rails app. Streaming binary data through a Ruby process performs poorly and leaves the app vulnerable to thundering herds.

We were ready to make it better.

Our V2

Aspirations

We wanted to be able to change our package generation code, and we didn’t want our Rails app bogged down streaming package downloads.

It was time to serve the packages directly from an object store. It’s more performant, and it avoids the package fidelity problems.

But we wanted to keep control of the auth logic for these download requests, and that’s not very flexible with most object stores. A common way to retain authentication while offloading asset downloads is to redirect the client to the object store using a pre-signed URL. We could have done this: it would have required only a little feature work in the Rails app, but it would have been hard to test given how our development environment is set up.

One last minor goal: we wanted to retain the ability to serve download requests immediately, and avoid tracking the state of uploads. This would minimize the number of components that needed to change.

These constraints informed the updated design of package downloads.

The Gist of Our Approach

Now when a package is generated, we still store its digest in the database, but we also save its contents to the KV Store.

Our new edge Wasm component intercepts package download requests and serves them from the KV Store. Each request still goes to the Rails application for authorization and the content-digest check, but now Rails only streams package contents when the package isn’t in the KV Store.

Package Creation

Package creation in V2 is largely the same. But now, once the package record is committed to the database, the Rails app enqueues a background job to upload the package. The background job makes another request to the CA API, this time for the package data, and uploads the package to the object store (Fastly KV Store) for persistence.

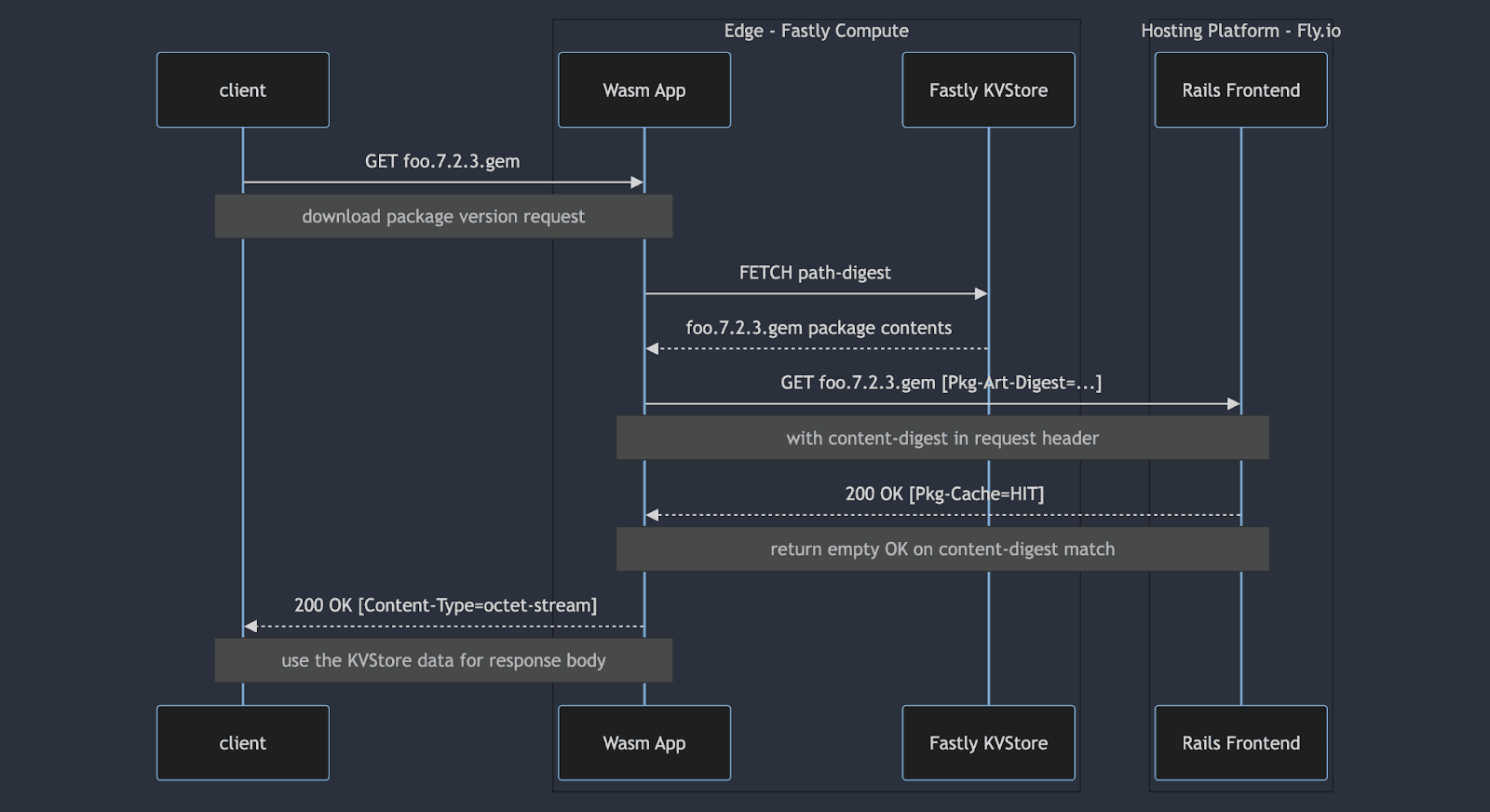

Package Downloads

Package download requests now go through the edge Wasm component first. When the component detects a download request, it hashes the request path and uses the path digest as the lookup key in the Fastly KV Store. On a successful lookup, it hashes the package data from the KV Store, adds that digest to the request in a Pkg-Art-Digest header, and proxies the request to the Rails app.
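A rough sketch of the lookup and header step, using only Go's standard library. The in-memory map stands in for the KV Store, and `hexDigest`, `annotate`, `newRequest`, the example path, and the use of SHA-256 are assumptions for illustration; only the Pkg-Art-Digest header name comes from the design above. None of this is the Fastly SDK.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"net/http"
)

// digestHeader is the request header the edge adds on a successful lookup.
const digestHeader = "Pkg-Art-Digest"

// hexDigest returns the hex-encoded SHA-256 of b (the hash choice is an assumption).
func hexDigest(b []byte) string {
	sum := sha256.Sum256(b)
	return hex.EncodeToString(sum[:])
}

// annotate looks the package up by the digest of the request path. On a hit it
// records the digest of the stored bytes on the request that will be proxied
// to the origin. It reports whether the store had an entry.
func annotate(req *http.Request, store map[string][]byte) bool {
	data, ok := store[hexDigest([]byte(req.URL.Path))]
	if !ok {
		return false
	}
	req.Header.Set(digestHeader, hexDigest(data))
	return true
}

// newRequest builds a GET request for path on anchor.dev.
func newRequest(path string) *http.Request {
	req, err := http.NewRequest(http.MethodGet, "https://anchor.dev"+path, nil)
	if err != nil {
		panic(err)
	}
	return req
}

func main() {
	path := "/example/packages/ca-bundle-1.0.0.tgz" // hypothetical download path
	store := map[string][]byte{
		hexDigest([]byte(path)): []byte("package bytes"),
	}

	req := newRequest(path)
	fmt.Println(annotate(req, store), req.Header.Get(digestHeader))
}
```

Keying on a digest of the path gives every package a fixed-length, predictable key without the edge needing to know anything about the package record itself.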

Our Rails app performs the usual authentication checks and fetches the package record from the database, then checks for the content digest header. If the header value matches the digest in the database, an empty 200 OK response is returned with a Pkg-Cache=HIT header. Back on the edge, if the Wasm component detects the Pkg-Cache=HIT header, it fills in the response body with the package contents from the KV Store.
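The origin side of this handshake lives in our Rails app, but the decision is small enough to sketch in Go for illustration. Everything here is a stand-in: `originHandler`, the `digests` map (playing the role of the database), and the `regenerate` callback (playing the role of the CA API) are hypothetical, and authentication is reduced to a comment.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// originHandler stands in for the download action. digests maps a request
// path to the content digest recorded in the database, and regenerate stands
// in for the call to the backend CA API.
func originHandler(digests map[string]string, regenerate func(path string) []byte) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Authentication and authorization would run here, before any lookup.
		want, ok := digests[r.URL.Path]
		if !ok {
			http.NotFound(w, r)
			return
		}
		if got := r.Header.Get("Pkg-Art-Digest"); got != "" && got == want {
			// The edge already holds the right bytes: confirm with an empty 200.
			w.Header().Set("Pkg-Cache", "HIT")
			w.WriteHeader(http.StatusOK)
			return
		}
		// Missing or mismatched digest: stream the regenerated package.
		w.Write(regenerate(r.URL.Path))
	})
}

// check runs one request through h and returns the Pkg-Cache response header
// and the response body.
func check(h http.Handler, path, digest string) (string, string) {
	req := httptest.NewRequest(http.MethodGet, path, nil)
	if digest != "" {
		req.Header.Set("Pkg-Art-Digest", digest)
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Result().Header.Get("Pkg-Cache"), rec.Body.String()
}

func main() {
	h := originHandler(
		map[string]string{"/pkg/a.tgz": "digest-of-a"},
		func(string) []byte { return []byte("regenerated bytes") },
	)

	cache, body := check(h, "/pkg/a.tgz", "digest-of-a")
	fmt.Printf("%q %q\n", cache, body)

	cache, body = check(h, "/pkg/a.tgz", "stale-digest")
	fmt.Printf("%q %q\n", cache, body)
}
```

Because the origin answers every request, the authorization decision never leaves the application; the edge only learns whether its cached bytes are the right ones.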

If the package isn’t found in the object store or the digests don’t match, the download falls back to the V1 behavior: the package data is regenerated by the backend CA API and streamed back through the Rails app.
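Putting the hit path and the fallback together, the edge decision reduces to one small function. The sketch below models it without any Fastly APIs: the `origin` callback abstracts the round trip to the Rails app, the map abstracts the KV Store, and all names plus the SHA-256 choice are assumptions.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// hexDigest returns the hex-encoded SHA-256 of b (the hash choice is an assumption).
func hexDigest(b []byte) string {
	sum := sha256.Sum256(b)
	return hex.EncodeToString(sum[:])
}

// origin models the round trip to the Rails app. It receives the path and the
// digest of the cached bytes ("" when nothing is cached) and reports whether
// it answered with Pkg-Cache: HIT, along with any body it streamed back.
type origin func(path, cachedDigest string) (hit bool, body []byte)

// download returns the bytes to send to the client and whether they came from
// the store. The origin is always consulted, so authorization still applies.
func download(path string, store map[string][]byte, askOrigin origin) ([]byte, bool) {
	cached, found := store[hexDigest([]byte(path))]
	digest := ""
	if found {
		digest = hexDigest(cached)
	}
	hit, body := askOrigin(path, digest)
	if found && hit {
		return cached, true
	}
	// Not cached, or the cached bytes are stale: pass the origin's body through.
	return body, false
}

func main() {
	path := "/example/packages/ca-bundle-1.0.0.tgz" // hypothetical download path
	current := []byte("package v1")
	recorded := hexDigest(current) // the digest the database would hold

	rails := func(_ string, cachedDigest string) (bool, []byte) {
		if cachedDigest == recorded {
			return true, nil // empty 200 with Pkg-Cache: HIT
		}
		return false, current // regenerated by the CA API and streamed back
	}

	key := hexDigest([]byte(path))
	warm := map[string][]byte{key: current}
	stale := map[string][]byte{key: []byte("package v0")}
	empty := map[string][]byte{}

	for _, store := range []map[string][]byte{warm, stale, empty} {
		body, fromStore := download(path, store, rails)
		fmt.Printf("%q fromStore=%v\n", body, fromStore)
	}
}
```

In all three cases the client receives the current package; the only difference is whether the bytes travel through the origin.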

Advantages

This works with our existing authentication mechanisms (cookies and download tokens), and keeps authentication logic in Rails, with familiar tools: we didn't need to duplicate any authn/authz code in the Wasm component.

It falls back gracefully to the existing package generation if the upload to the object store hasn't finished, or the queue is backed up for any reason. We’re protected from thundering herds, and downloads are faster because the data is closer to the client.

And we have the ability to test this end-to-end locally with integration tests instead of a mock object store, thanks to the testing tools available for Fastly Compute.

Simulation and Testing with Viceroy

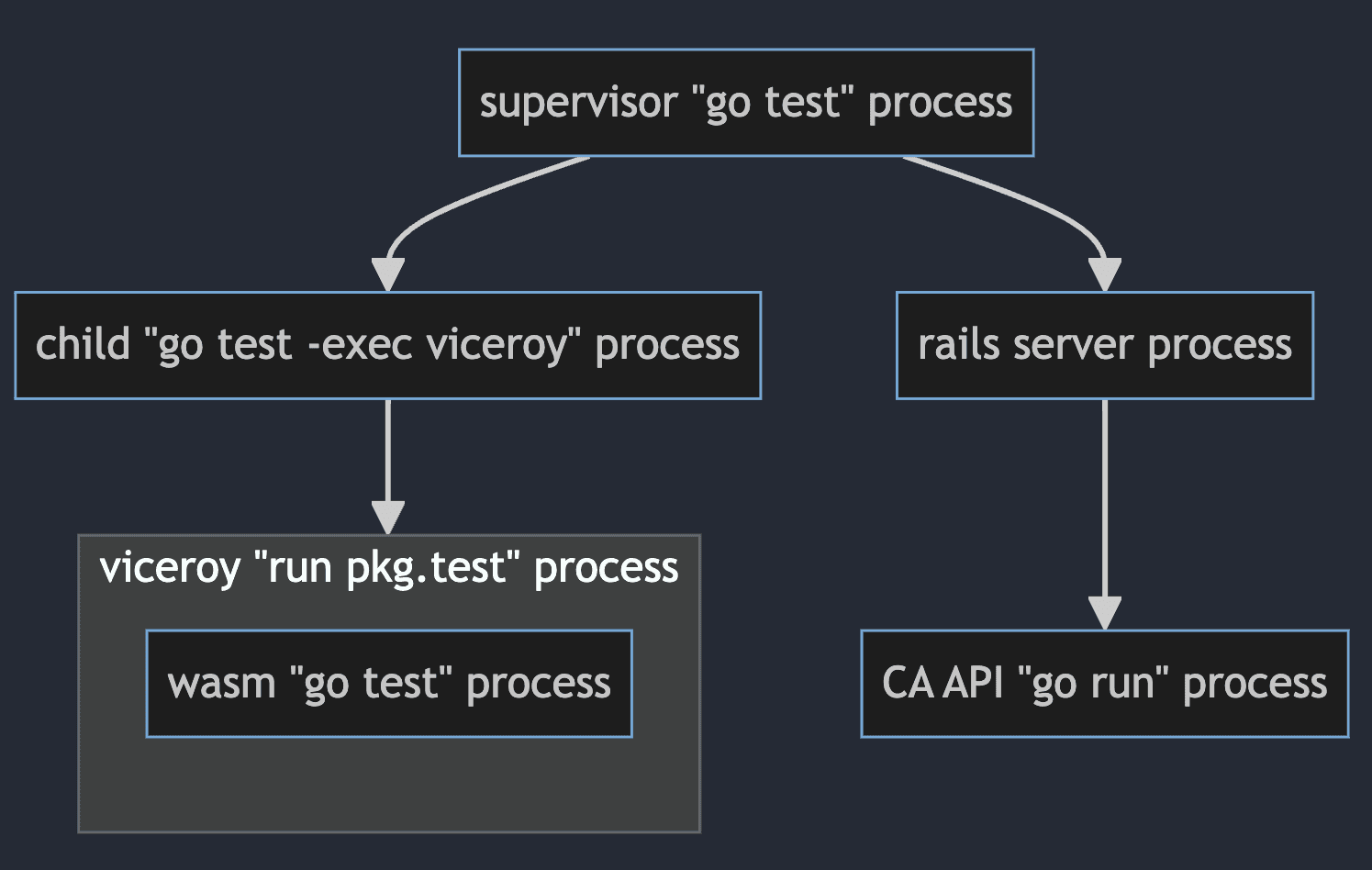

We take dev/prod parity very seriously. (It's one of the reasons we built lcl.host.) Downloading a package can involve a Wasm-based edge component, our Rails application for SaaS business logic, and our Go-based API for generating the package contents. How do you reliably test this stack?

Enter Viceroy, the unsung hero of Fastly’s Edge Compute platform. Viceroy is a single binary that wears many hats: it's a hypervisor, reverse proxy, and test environment all in one. It integrates into Go's test toolchain, so we can use Viceroy as the execution context for end-to-end tests. We get dev/prod parity, and our tests don’t run against mocks or test fixtures.

We do this by running the edge Wasm code inside the Viceroy hypervisor, which proxies requests to a local deployment of our Rails app. Here’s what the process hierarchy looks like for a test run:

These tests run like an ordinary go test ./... run on our system, but we have wired up some test helpers for Viceroy. The helpers use the test’s TestMain entrypoint to rebuild and re-run the test process, this time with Viceroy as the test executor. Here’s what a sample TestMain looks like:

This example starts an HTTP server on the host and points Viceroy’s test-backend origin to it. In the test function code, a handler can send a test request to a backend, and Viceroy will bridge that request from the Wasm layer to the server on the host:

This gives us end-to-end testing of our entire stack. Requests for package downloads can originate from the Wasm test process and exercise code paths in the Rails app and CA API. Here is the repo for the Go package we use to run these tests:

https://github.com/anchordotdev/fastlytest

Conclusion

Building a core infra startup with limited resources is not simple or easy, but Fastly Compute makes it possible for us. We get to leverage their global infrastructure and move portions of our SaaS workload to the edge, while keeping important business logic in our frontend app and backend API. And the performance is great. Maybe you noticed, since this blog post was served to you by that same Wasm component! 🔥

Combining this novel infra platform with an accurate testing environment gives us confidence that features are working correctly across multiple components in development, without having to test in staging or production environments. In fact, the new package downloads worked as expected the first time we deployed them to staging, thanks to the rigorous testing that Viceroy makes possible. Read about what we’ve built from Fastly’s perspective in the Fastly Compute case study on Anchor.

We built package caching on top of Fastly Compute as a precursor to a true CA-aware caching layer. This caching layer allows us to provide high availability for certificate provisioning and resources that TLS connections depend on, the kinds of guarantees that only public CAs operating at scale can provide today. We’ll talk more about what it means to build a CA-aware caching layer in later posts, and why the features of Fastly Compute are critical to our business.

Join our private beta and get early access to Anchor. And try out lcl.host (powered by Anchor) in your development environment right now.